Modern systems generate vast amounts of telemetry logs, metrics, traces, and user interactions. The challenge is identifying meaningful anomalies in time to act. This is where AI testing proves invaluable. By using anomaly detection, AI can turn raw signals into early warnings about unexpected behavior that traditional tests may miss.

While conventional tests verify known routes, calculations, or UI states, AI testing identifies the unknowns: response time spikes, sudden drops in success rates for specific browsers, or unusual errors on low-memory devices. These insights help teams catch issues before they impact users and complement traditional test coverage effectively.

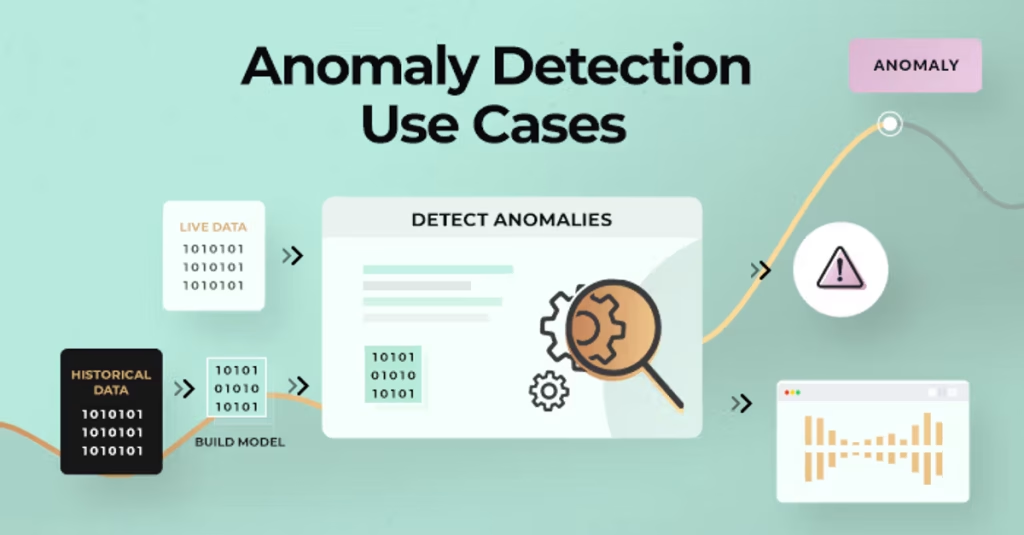

What does anomaly detection actually do?

An anomaly detector learns a baseline from historical behavior and flags new data points that deviate from it. The baseline can be simple, like moving averages with seasonality, or more advanced, like probabilistic models accounting for time of day, day of week, or the shape of a distribution. In practice, you feed the detector a stream of signals that correlate with user experience and correctness. The output is a concise set of alerts with scores and context, rather than a wall of raw logs.

For test teams, the benefits are tangible. AI testing allows you to stop manually scanning dashboards and start triaging a handful of scored deviations. By testing with AI, the system retains memory of your product’s typical behavior, learning patterns week-to-week and detecting anomalies that human eyes might miss. This ensures consistent insights and faster issue resolution, even as priorities shift.

Where to point anomaly detection first?

Start at the seams where a small defect can become a visible problem. Your top candidates usually include login, checkout, search, content save, file upload, and any path that interacts with third-party APIs. Monitor simple metrics that tell a story: success rates, error counts by code, client-side error rates by browser and OS, response time percentiles, and abandoned sessions for specific steps. For UI-heavy products, include page-level events such as long tasks, layout shift outliers, and navigation errors.

When performing AI testing in real browsers and devices, treat the environment as part of the signal. An anomaly that appears only in a single Chrome version or on older iPhones is as important as one that appears everywhere. By testing with AI and reporting anomalies along with these dimensions, triage becomes faster because the risk and affected audience are immediately clear.

How do anomalies fit with pre-release and post-release checks?

You can apply the same approach both before and after a release. Before shipping, run end-to-end flows against a staging build and let the system compare current behavior to the last known good build. After release, monitor the same signals in production with lower thresholds for change. This combination makes AI testing highly effective. Pre-release detection catches obvious regressions without involving real users, while post-release detection identifies environment-specific issues that appear only under real devices, caches, and networks.

When testing with AI in browser-based validation, it’s essential to keep evidence organized. Platforms that record videos, screenshots, and console or network logs for each run make it easier to connect anomalies to concrete examples, improving both analysis and remediation.

Signals, context, and why labels matter

A detector is only as effective as the context you attach to its alerts. A spike in HTTP 500 errors is useful, but a spike in HTTP 500 for the checkout service in region A on Edge for Windows is actionable. Structuring telemetry to include user agent, OS, app version, region, and feature flags enhances AI testing by making alerts precise and meaningful. Adding deployment markers to time series and test build identifiers to synthetic runs turns each alert into a short list of owners and a likely root cause.

Labels also improve testing with AI by accounting for seasonality. Your product may see high traffic on Monday mornings or low traffic on weekends. With proper context, the AI detector understands expected patterns, reduces false positives, and builds trust among engineers.

Choosing a modeling approach

You don’t need an exotic model to start AI testing. Many teams begin with robust statistics such as medians, median absolute deviation, moving quantiles, and seasonal decomposition. As your needs grow, you can incorporate density models, Bayesian changepoint detection, or shallow sequence models that learn common patterns without overfitting. The goal is not perfect prediction but to flag deviations from the recent past with enough confidence for humans to intervene.

For effective testing with AI, the model should explain itself clearly. Include details like the baseline window, magnitude of deviation, and comparison peers. If an alert cannot be explained in two sentences, engineers are less likely to adopt it.

Where does generative AI in software testing fit?

Generative models can greatly enhance AI testing by assisting with triage and explanation, even if they aren’t perfect for raw detection. Once an anomaly is flagged, a generative assistant can retrieve similar incidents, highlight likely hypotheses from change logs, and draft a concise investigation plan. It can summarize related logs and traces, so testers don’t have to sift through hundreds of lines to find the few that matter. It can also help produce clear incident reports with links to test runs, artifacts, and commits.

Modern cloud-based testing platforms take this a step further by combining generative AI with scalable infrastructure, allowing teams to run tests across thousands of real devices, browsers, and OS combinations simultaneously. Tools like LambdaTest KaneAI, a GenAI-native testing agent, allow teams to plan, author, and evolve tests using natural language. KaneAI integrates seamlessly with LambdaTest’s offerings for test planning, execution, orchestration, and analysis, bringing generative AI directly into everyday testing workflows.

With KaneAI, teams can leverage features such as intelligent test generation, automated test planning based on high-level objectives, multi-language code export, sophisticated conditionals in natural language, API testing support, and execution across 3000+ device and browser combinations. In testing with AI, generative models like KaneAI help reduce manual effort, provide actionable insights, and ensure faster, more reliable test outcomes, all while keeping humans in the loop to confirm risks and decide fixes.

Integrating anomaly detection into CI and nightly runs

Fold detection into the schedules you already use. On each commit, run a small set of synthetic journeys and compare them to the last successful build. Let the detector gate merge only when the deviation is large and repeatable. At night, run a broader matrix and lower the thresholds so that subtle drifts appear early. Keep artifacts from both so you can jump from an alert to a video or screenshot quickly.

When you are validating across browsers and operating systems, it helps to execute at scale on clean machines and to keep consistent viewports and fonts for comparability. Parallel sessions shorten wall-clock time and make it practical to run enough journeys to learn a reliable baseline. Over time, you will build a library of normal behavior that makes the detector stronger and the alerts calmer.

Interpreting alerts without chasing noise

Every alert should answer three questions. How big is the deviation? Who is affected? What changed recently? If your pipeline tags browser and OS, build numbers, regions, and feature flags, those answers appear with the alert. If your detector links to a small set of representative runs, a reviewer can confirm the behavior in minutes. Add a simple severity rubric so teams know whether to stop a release, watch a metric, or open a task for later.

Resist the temptation to mute noisy signals without understanding them. If a metric fires often, either your baseline window is wrong, your seasonality is unmodeled, or the signal is not meaningful. Fix the model or remove the metric. Keeping untrusted alerts will teach the team to ignore the system.

Privacy and governance considerations

Telemetry is still data about people. Be careful about what you collect and how long you keep it. Mask personal information before it leaves the client. Aggregate wherever possible. Use access controls so only the right people can view raw events. Document your data sources, retention policies, and the purpose of each signal. Treat detection artifacts like any other record that might be reviewed later. A short privacy note in your test plan is better than an unclear practice that grows quietly over time.

Conclusion

Anomaly detection is a practical way to extend scripted checks with sightlines into the unexpected. It is a natural use of AI in testing, because it turns raw telemetry into early warnings that humans can judge and fix. Start where defects hurt most, attach rich context to every signal, and keep the modeling simple enough to explain.

Use generative AI in software testing to summarize, retrieve history, and draft investigation steps, but keep people in charge of decisions. When you run journeys across real browsers and devices, record evidence so alerts map to something you can watch and trust. With a few steady habits and a clear baseline, you will find bugs that used to appear only in production, and you will fix them before users notice. For a practitioner’s look at anomaly-focused reporting in everyday automation, you can read LambdaTest’s guide on anomaly reporting in testing, which aligns closely with the approach described here.